all LLMs are overhyped… chatGPT, perplexity, claude, llama3, mistral, mixtral and wizardLM2 [my favorite LLM btw]

Part 0 : LLMs won’t Take “YOUR” Job Away

not now, not anytime soon and definitely not because of chatGPT

this is an ELI5 [explain like i’m 5] deep dive into how “Transformers” work

remember: all LLMs are simply very advanced “autocomplete” algorithms

Part 1 : How do Transformers Work

this guide is going to skip over the fundamentals of how neural networks can learn almost anything…

PS: I recommend you read this blog post for an intuitive understanding of neural networks

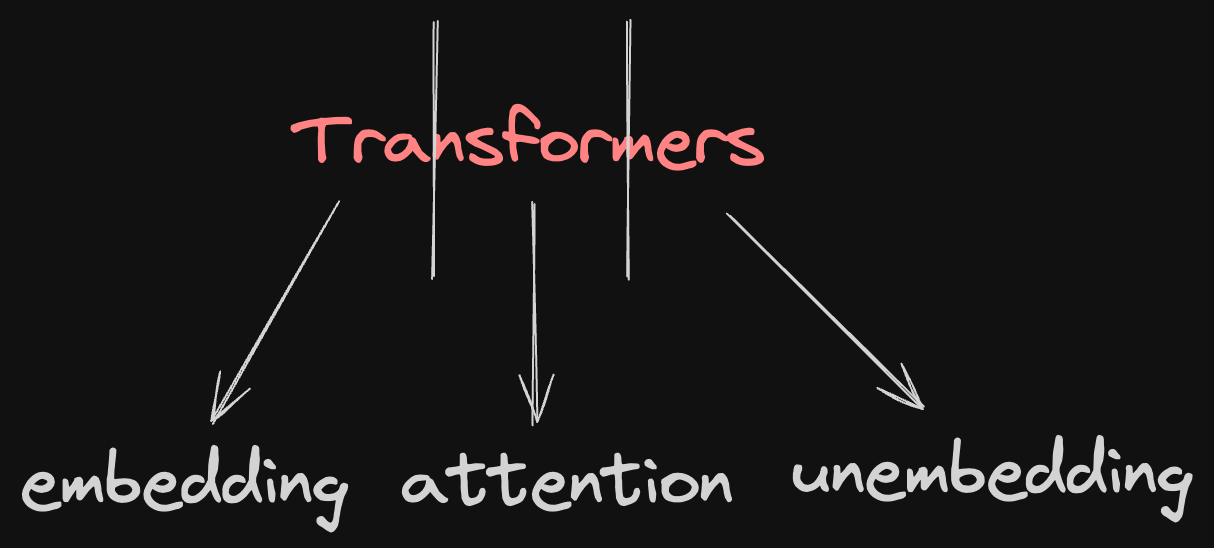

Transformers have 3 major components:

Embedding

all computer algorithms understand numbers… okay, at least numbers in binary

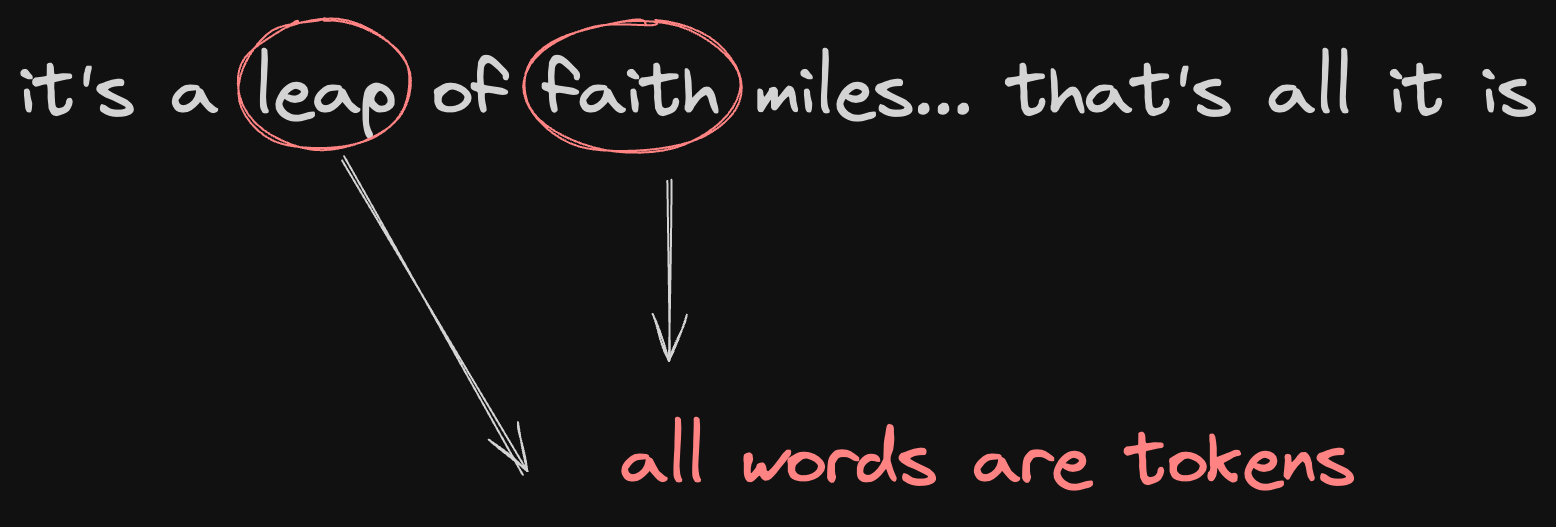

but whenever you interact with an LLM you talk in words… or tokens to be more precise

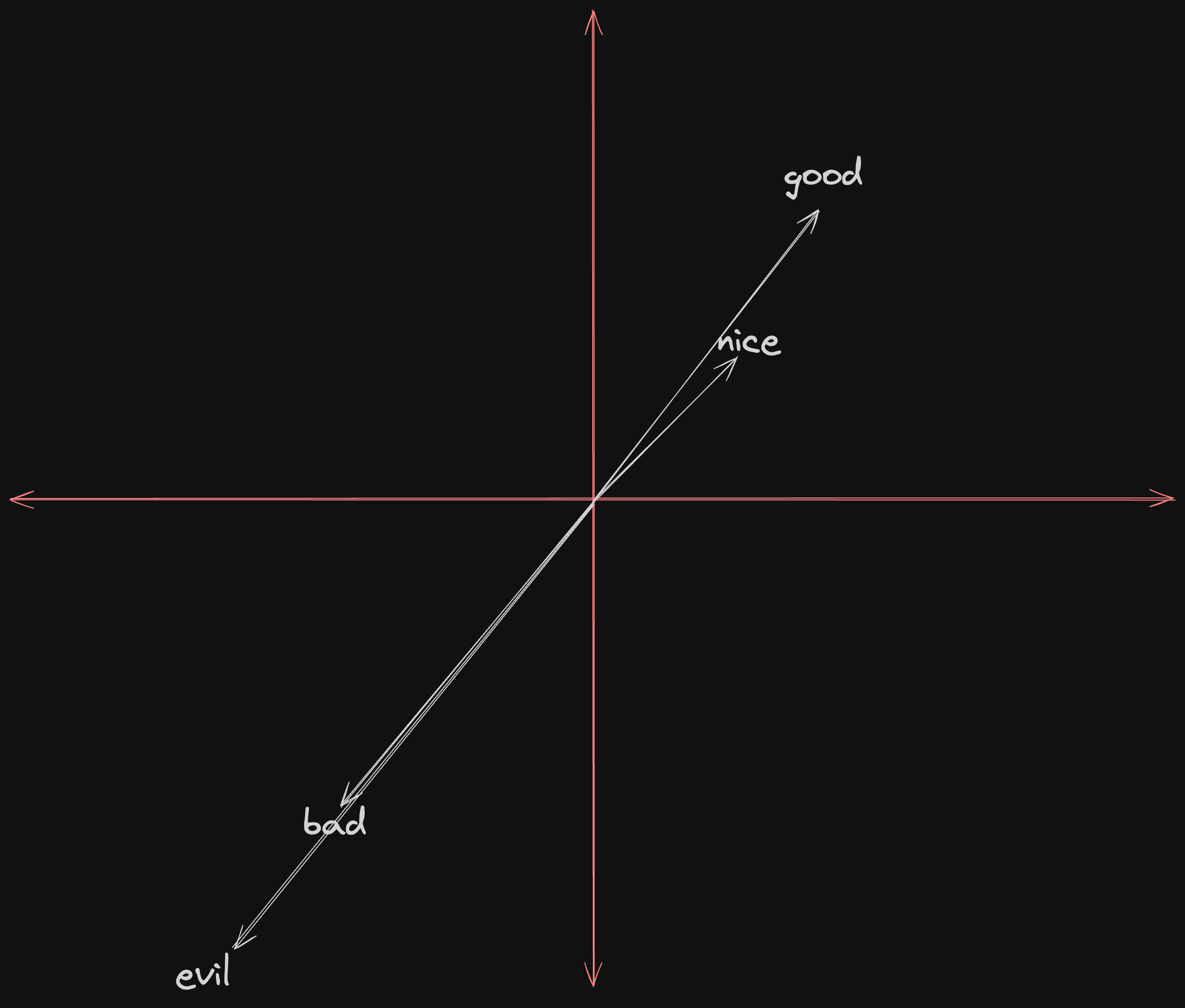

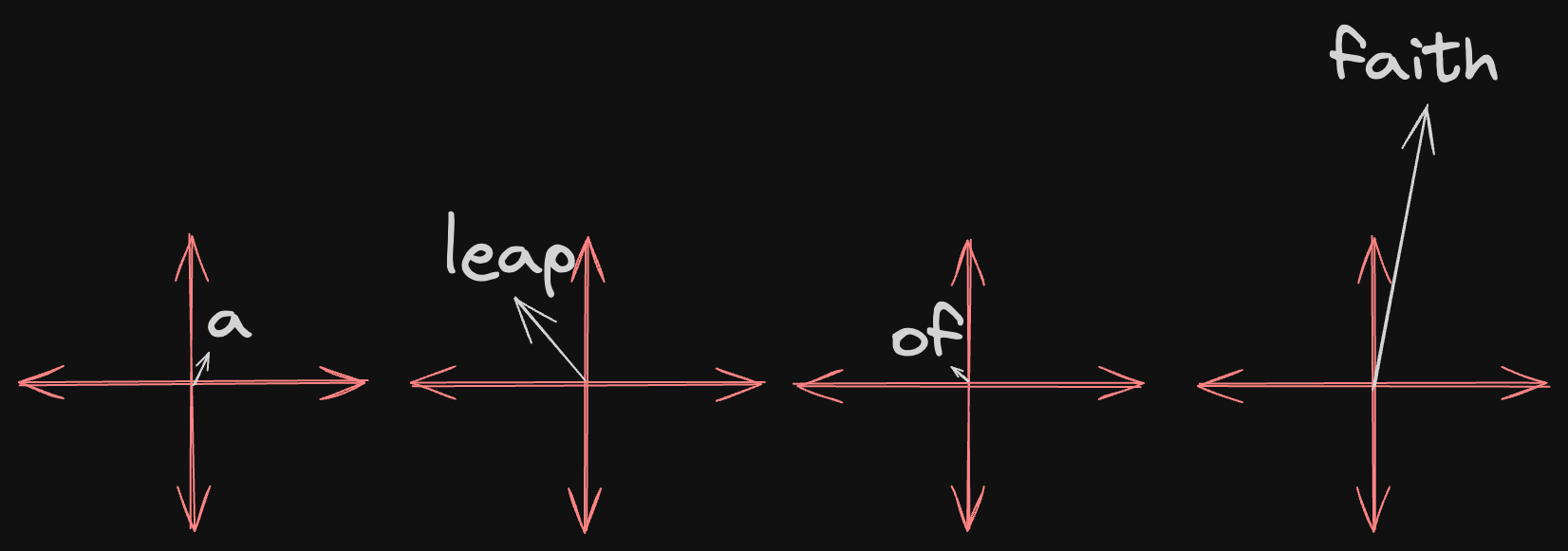
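to make “tokens” a bit more concrete, here is a toy sketch in python. it assumes a token is just a lowercase word or a punctuation mark, which is a simplification: real LLM tokenizers usually split text into sub-word pieces. the function names `tokenize` and `build_vocab` are made up for this example.

```python
import re

def tokenize(text):
    # toy rule: a token is a run of letters/digits/apostrophes,
    # or a single punctuation character
    return re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())

def build_vocab(tokens):
    # give every distinct token an integer id, in order of first appearance
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

tokens = tokenize("a leap of faith is a leap")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)     # ['a', 'leap', 'of', 'faith', 'is', 'a', 'leap']
print(token_ids)  # [0, 1, 2, 3, 4, 0, 1]
```

so the model never sees your words… it only ever sees a list of numbers like `token_ids`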

for the LLM to show any signs of intelligence… it needs to be able to understand relationships between 2 tokens and since computers are good with math we do this by plotting the tokens as vectors on a graph

words with similar meaning point in a similar direction

words with similar intensity have similar magnitude
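“direction” and “magnitude” can both be measured with a few lines of math. the sketch below uses cosine similarity for direction and vector length for magnitude. the 2D vectors for `happy`, `joyful` and `sad` are invented for illustration, real embeddings have hundreds or thousands of dimensions and are much messier.

```python
import math

def magnitude(v):
    # length of the vector
    return math.sqrt(sum(x * x for x in v))

def cosine_similarity(a, b):
    # close to 1 = same direction, close to -1 = opposite direction
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (magnitude(a) * magnitude(b))

# made-up 2D embeddings, purely for illustration
happy = [2.0, 1.0]
joyful = [4.0, 2.0]
sad = [-2.0, -1.0]

print(cosine_similarity(happy, joyful))  # close to 1
print(cosine_similarity(happy, sad))     # close to -1
print(magnitude(happy), magnitude(joyful))
```

in this toy setup `happy` and `joyful` point the same way but `joyful` is twice as long, while `sad` points the opposite way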

now imagine having a pre-plotted graph for every token the model knows… typically tens to hundreds of thousands of them

so for each token the transformer looks up the corresponding embedding
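the lookup itself is about as boring as it sounds. a minimal sketch, assuming a tiny 4-token vocabulary and hand-written 3D vectors (in a real model the table is learned during training, and `vocab`, `embedding_table` and `embed` are names i made up):

```python
# token -> row number in the table
vocab = {"a": 0, "leap": 1, "of": 2, "faith": 3}

# one row of numbers per token (values invented for illustration)
embedding_table = [
    [0.1, 0.0, 0.2],   # a
    [0.9, 0.4, -0.3],  # leap
    [0.0, 0.1, 0.1],   # of
    [0.7, 0.8, -0.2],  # faith
]

def embed(tokens):
    # look up the row for each token, nothing more
    return [embedding_table[vocab[t]] for t in tokens]

vectors = embed(["a", "leap", "of", "faith"])
print(vectors[1])  # [0.9, 0.4, -0.3]
```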

Attention

if you’ve followed deep learning for even a little while… chances are you’ve heard of the famous Google paper “Attention Is All You Need”

in language, the meaning of the word often varies with the context that it is used in…

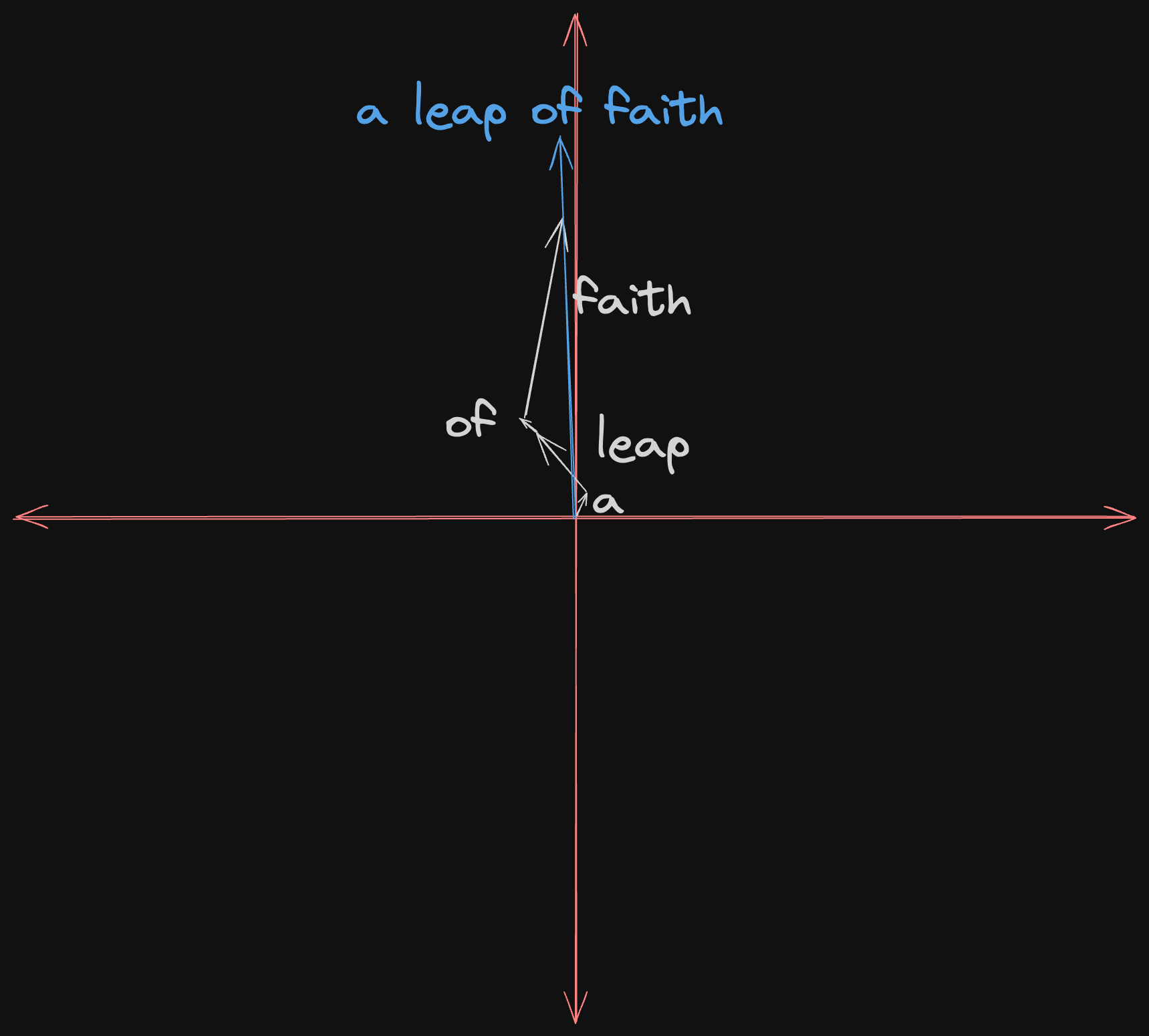

taken separately, each word in the phrase “a leap of faith” would have its own embedding

but clearly the individual embeddings do not capture the semantic meaning of the whole phrase

with some simple matrix multiplication magic, the transformer blends the contextual meaning of the whole phrase into each token’s embedding
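here is a rough sketch of that “magic”, known as scaled dot-product attention. to keep it ELI5 it makes one big assumption: the token embeddings are used directly as queries, keys and values, whereas a real transformer first multiplies them by three learned matrices. the vectors are the same made-up ones for “a leap of faith”.

```python
import math

def softmax(scores):
    # turn raw scores into positive weights that sum to 1
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    d = len(embeddings[0])
    output = []
    for query in embeddings:
        # how strongly does this token relate to every token in the phrase?
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in embeddings
        ]
        weights = softmax(scores)
        # new embedding = weighted average of all embeddings in the phrase
        mixed = [
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(d)
        ]
        output.append(mixed)
    return output

# made-up embeddings for: a, leap, of, faith
phrase = [
    [0.1, 0.0, 0.2],
    [0.9, 0.4, -0.3],
    [0.0, 0.1, 0.1],
    [0.7, 0.8, -0.2],
]
contextual = self_attention(phrase)
print(contextual[1])  # "leap", now mixed with a bit of every other word
```

each output vector is a weighted average of the whole phrase, so “leap” now carries a little of “faith” with it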

Unembedding

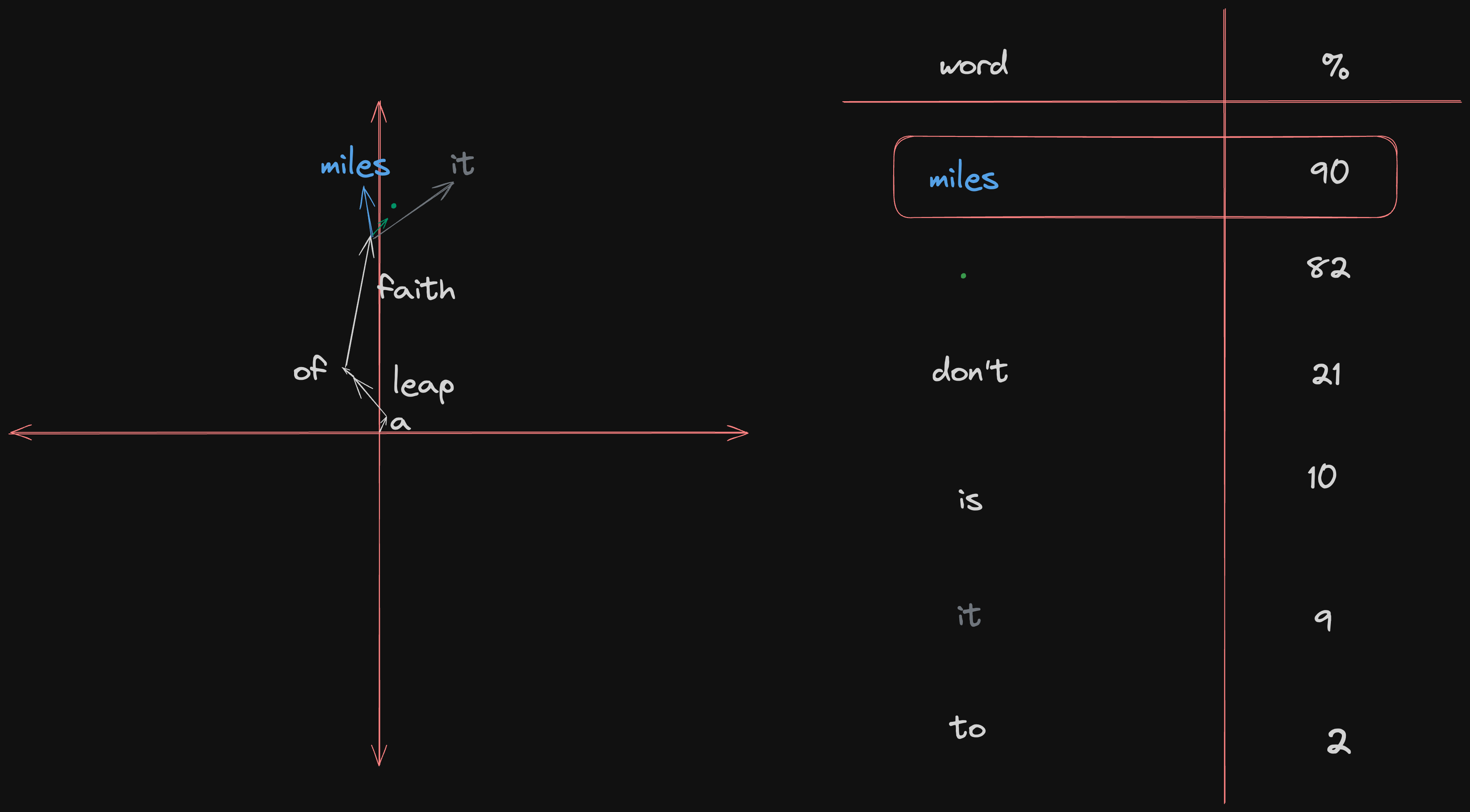

now that the LLM has a contextual understanding of the phrase… next it uses a classic statistical algorithm AKA a neural network, trained on a huge amount of data, to figure out

- whether there should be a next word at all

- what could the next word be based on all the training data that it has seen
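both questions can be answered in one go if “stop here” is treated as just another token. a minimal sketch, assuming a 3-token vocabulary and an invented `unembedding` table: score every token against the context vector, then squash the scores into probabilities with softmax.

```python
import math

# tiny made-up vocabulary, "<end>" means "no next word"
vocab = ["faith", "pizza", "<end>"]

# one row of weights per vocabulary token (values invented for illustration)
unembedding = [
    [1.0, 0.5],    # faith
    [-0.5, 0.2],   # pizza
    [0.1, -1.0],   # <end>
]

def next_token_probabilities(context_vector):
    # one raw score (logit) per token in the vocabulary
    logits = [
        sum(w * x for w, x in zip(row, context_vector))
        for row in unembedding
    ]
    # softmax: scores -> probabilities that sum to 1
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(vocab, exps)}

probs = next_token_probabilities([2.0, 1.0])
best = max(probs, key=probs.get)
print(best)  # faith
```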

LLM chatbots do this on the prompt you give them… over and over, one token at a time, until the next token the LLM predicts is a special “end of text” token [the NULL]
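that loop looks roughly like this. the “model” here is a hard-coded lookup table called `next_token_table`, which is obviously a stand-in i made up: a real LLM would run embedding, attention and unembedding at every step, but the loop around it has the same shape.

```python
END = "<end>"  # plays the role of the NULL

# stand-in for the model: last token -> next token
next_token_table = {
    "take": "a",
    "a": "leap",
    "leap": "of",
    "of": "faith",
    "faith": END,
}

def predict_next(tokens):
    # a real LLM looks at the whole context, this toy only looks at the last token
    return next_token_table.get(tokens[-1], END)

def generate(prompt_tokens, max_new_tokens=20):
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        nxt = predict_next(tokens)
        if nxt == END:
            break  # the model says there is no next word
        tokens.append(nxt)
    return tokens

print(" ".join(generate(["take"])))  # take a leap of faith
```

the `max_new_tokens` cap is there so the loop still stops even if the end token never shows up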

Part 2 : How does chatGPT help me with my homework

if you think about it… after you’ve understood how transformers work… you might say that LLMs are basically just autocomplete

but you’ve gotten a lot of assistance from chatGPT… it knows how to solve every problem… it knows everything… or at least it seems to know everything

lemme ask you a question…

let’s say you wanted pizza (prompt) and you went to a pizzeria (chatGPT), which of the below best represents the LLM?

- waiter

- chef

- raw food

Answer:

1. waiter is the LLM

2. chef doesn't work in the pizzeria at all… the chef is whoever made the content in the first place [eg: the blog author who wrote an article on the question you asked chatGPT]

3. raw food is the enormous raw data available on the internet

Part 3 : How to build more intelligent AI

now that we have established that the current state of the art AI, LLMs like chatGPT, are basically autocomplete machines…

what is intelligence?

the ability of a system/entity to make a mental map of every input it has experienced [PS: looking something up in a hash map takes O(1) time on average]

Humans possess intelligence across 21 dimensions [Fun Fact: by some counts humans have 21 senses, not 5].

Robots will at most demonstrate intelligence in four dimensions [visual, auditory, textual and motor-encoding].

LLMs have only recently expanded to two dimensions [image and textual].

TL;DR research invested in Embedding Systems will give 100x returns

until next time! ✌

or you could spot me in the wild 🤭 i mean instagram, twitter, linkedin and maybe even youtube where i may make video versions of these blog posts